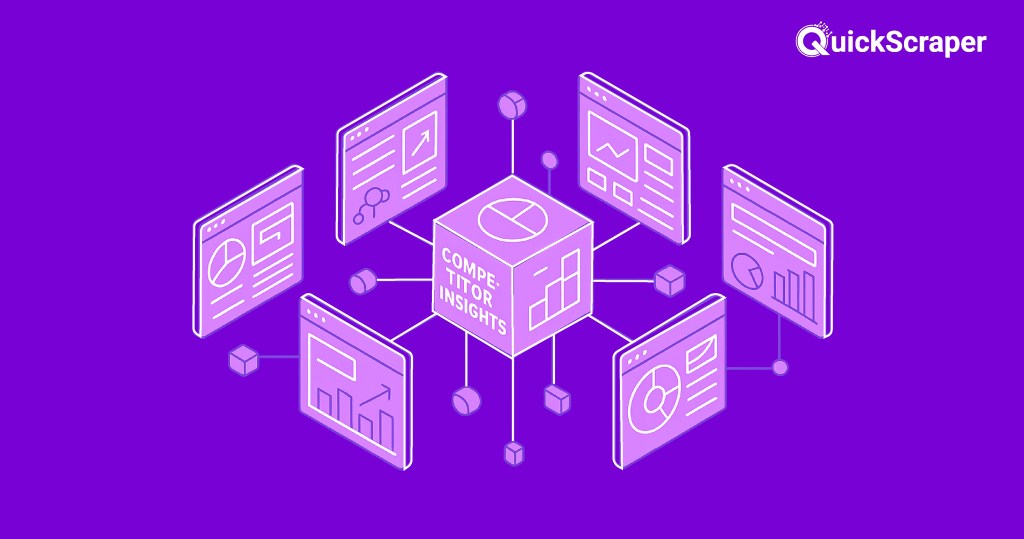

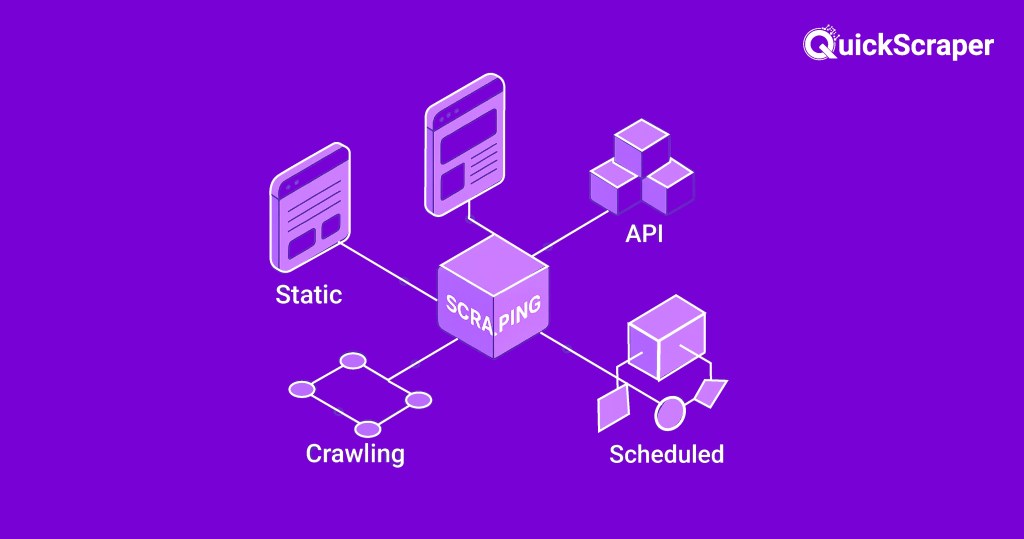

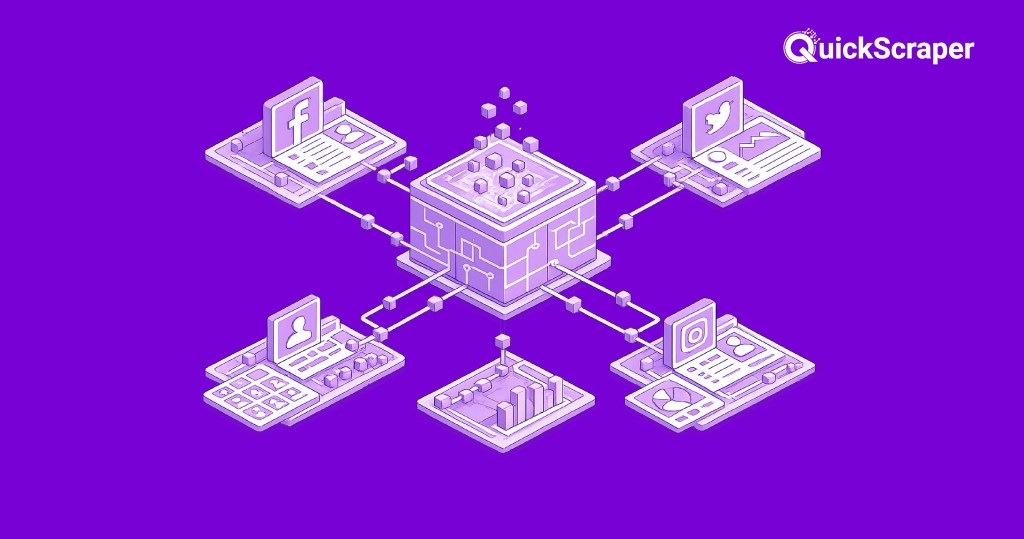

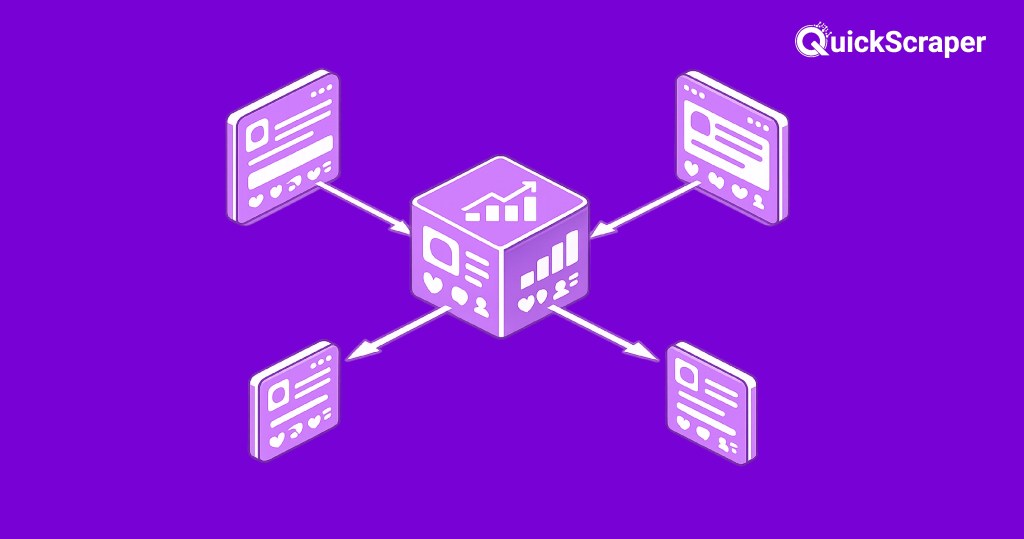

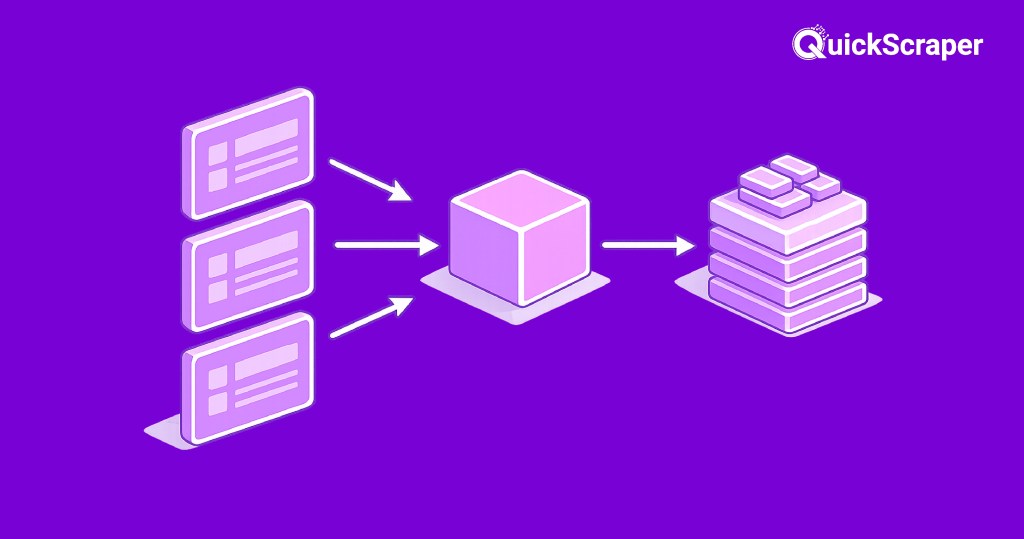

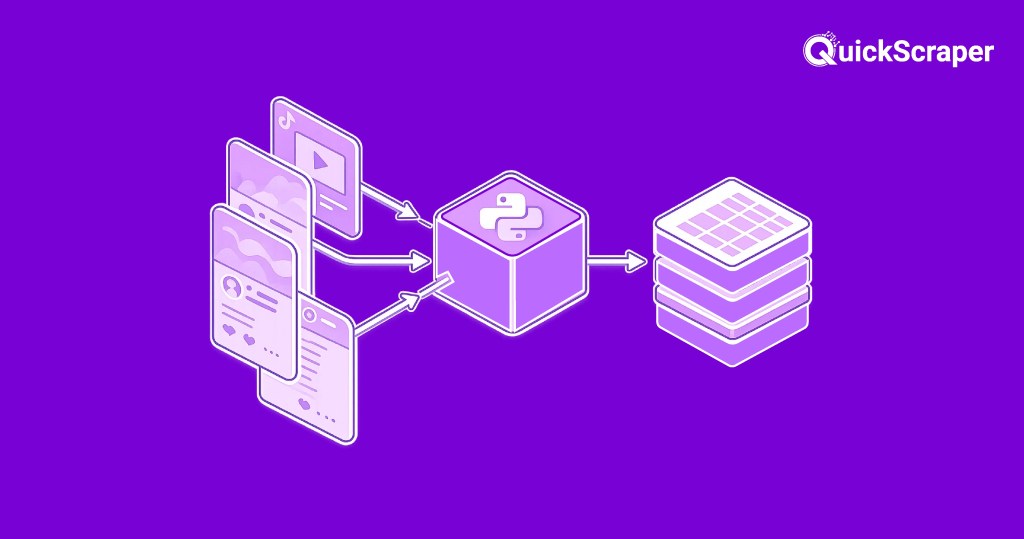

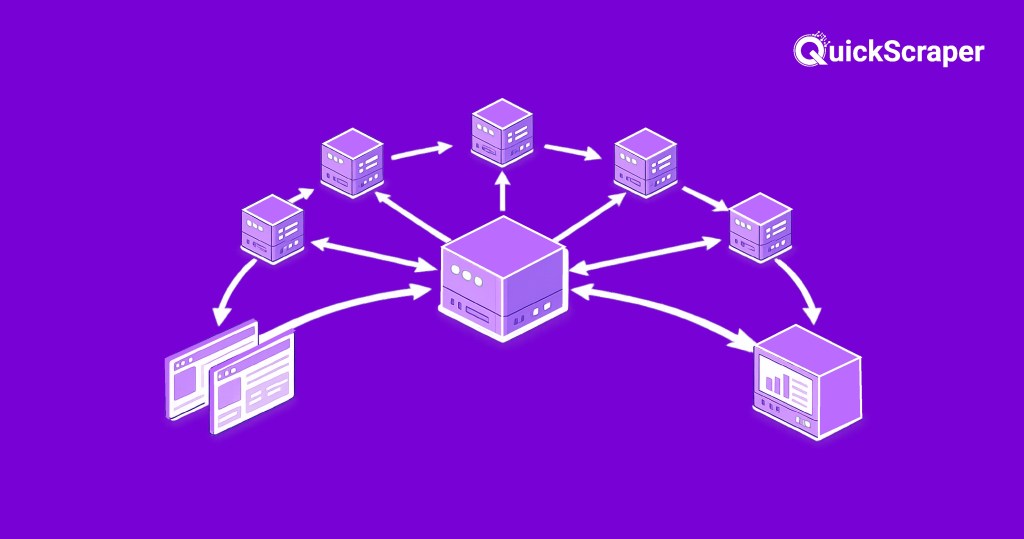

# Exploring the Best Types of Web Scraping: A Comprehensive Guide

Information is abundant on the internet, waiting to be harnessed for a variety of purposes. This is where web scraping comes into play, a powerful technique that allows you to extract data from websites and utilize it for analysis, research, and decision-making. In this guide, we’ll take a deep dive into some of the most valuable and popular types of web scrapers: Social Media Scrapers, E-Commerce Sites Scrapers, Business Scrapers, and Custom Website Scrapers.

## 1. Social Media Scrapers: Unveiling Insights from the Social Sphere

Social media platforms have become virtual gold mines of user-generated content, opinions, and trends. With the right social media scrapers, you can extract this valuable data for various purposes. Let’s delve into the specifics of each:

### Facebook Scraper: Unearthing Public Sentiments and Interactions

Facebook, the world’s largest social media platform, hosts a wealth of data that can be incredibly insightful. A Facebook scraper can be designed to collect public posts, comments, likes, and other interactions. This data is a goldmine for marketers seeking to understand audience sentiment, identify trends, and tailor their strategies accordingly. For researchers, it’s a treasure trove of information about public opinions and behaviors.

### Twitter Scraper: Capturing Real-Time Conversations and Trends

Twitter’s fast-paced environment makes it a hub for real-time conversations and trends. A Twitter scraper allows you to gather tweets, retweets, user profiles, follower information, and hashtags. This data is invaluable for researchers studying current events, sentiment analysis, and trending topics. Marketers can tap into Twitter scrapers to analyze user engagement, identify influencers, and measure the impact of their campaigns.

### Instagram Scraper: Visual Insights for Influencer Marketing

Instagram’s visual nature sets it apart as a platform rich in images, captions, comments, and hashtags.
An Instagram scraper can be designed to extract these elements, offering marketers and businesses a window into the world of influencer marketing. By collecting data on user-generated content, engagement levels, and popular hashtags, brands can refine their influencer collaborations and enhance their overall brand perception.

### LinkedIn Scraper: Navigating the Professional Landscape

LinkedIn is a goldmine of professional information, making a LinkedIn scraper a powerful tool for recruiters, sales professionals, and businesses. These scrapers can gather data on user profiles, job titles, companies, and connections. For recruiters, it streamlines candidate sourcing and profiling. Sales teams can identify potential leads and partnerships, while businesses can analyze industry trends and competitor workforce compositions.

## 2. E-Commerce Sites Scrapers: Gaining Competitive Edge in Online Markets

The world of e-commerce is fiercely competitive, and staying ahead requires data-driven decision-making. E-commerce site scrapers enable businesses to gather crucial insights from online marketplaces. Let’s explore the key platforms:

### Amazon Scraper: Unveiling Product Insights and Pricing Trends

Amazon’s extensive product range and user reviews make it a prime target for e-commerce scrapers. An Amazon scraper can extract information on product details, prices, ratings, and customer reviews. Businesses can analyze this data to optimize their pricing strategies, identify trending products, and refine their marketing approaches based on consumer feedback.

### eBay Scraper: Tracking Listings and Bidding Activities

eBay’s auction-style model and diverse product categories present unique challenges and opportunities. An eBay scraper can monitor listings, prices, bidding activities, and seller reputations. Businesses can track competitor behavior, assess price fluctuations, and determine the demand for specific products.
This information empowers sellers to make informed decisions about their product offerings and pricing.

### Etsy Scraper: Navigating the World of Artisanal Products

Etsy, a platform known for its handmade and unique products, is a niche market with its own dynamics. An Etsy scraper can collect data about product descriptions, customer reviews, and seller information. Artisans and sellers can use this data to understand market trends, optimize product descriptions, and identify potential collaborations with complementary product creators.

## 3. Business Scrapers: Extracting Insights from Directories and Listings

For businesses seeking industry insights, competitor analysis, and market trends, business scrapers offer a direct route to valuable information. Let’s explore two essential categories:

### Yellow Pages Scraper: Harnessing Local Business Data

Yellow Pages, an online directory of businesses, contains a wealth of information that can be harnessed with a Yellow Pages scraper. This tool can gather business contact details, addresses, services offered, and customer reviews. For local businesses and service providers, this data is instrumental in lead generation, customer engagement, and understanding their competitive landscape.

### Industry-specific Directories Scraper: Tailoring to Niche Needs

In various industries, specialized directories provide industry-specific data. Industry-specific directory scrapers can extract information about professionals, services, and businesses within a particular sector. For instance, a healthcare directory scraper can help healthcare organizations identify doctors, clinics, and medical services. These scrapers streamline lead generation and enable businesses to target their efforts effectively.

## 4. Custom Website Scrapers: Versatility for Unique Data Needs

Not all websites fit neatly into predefined categories. Custom website scrapers offer the flexibility to extract data from websites with unconventional structures.
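
To make the idea of a custom scraper a little more concrete, here is a minimal sketch in Python using only the standard library's `html.parser`. It is an illustration under stated assumptions, not a production tool: the markup in `SAMPLE_HTML`, the class names `listing`, `title`, and `price`, and the helper names `ListingParser` and `extract_listings` are all hypothetical, and the code parses an inline string rather than fetching a live page.

```python
from html.parser import HTMLParser

# Hypothetical markup: these class names are assumptions for illustration,
# not the structure of any real website.
SAMPLE_HTML = """
<div class="listing">
  <h2 class="title">2-bed flat</h2>
  <span class="price">$250,000</span>
</div>
<div class="listing">
  <h2 class="title">Family house</h2>
  <span class="price">$480,000</span>
</div>
"""


class ListingParser(HTMLParser):
    """Collects the text of elements whose class is in FIELDS, one dict per listing."""

    FIELDS = {"title", "price"}

    def __init__(self):
        super().__init__()
        self.records = []    # one dict per listing container seen so far
        self._field = None   # name of the field currently being read, if any

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class")
        if cls == "listing":
            # A new listing container starts a new record.
            self.records.append({})
        elif cls in self.FIELDS and self.records:
            self._field = cls

    def handle_data(self, data):
        # Store non-empty text only while inside a field element.
        if self._field and data.strip():
            self.records[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        # Any closing tag ends the field being read.
        self._field = None


def extract_listings(html):
    """Return a list of dicts, one per listing found in the HTML string."""
    parser = ListingParser()
    parser.feed(html)
    return parser.records


listings = extract_listings(SAMPLE_HTML)
for listing in listings:
    print(listing)
```

A real site would need its own field names and selectors, which is what makes this kind of scraper "custom". Fetching live pages also means checking the site's terms of use first.
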
Here are a couple of examples:

### Real Estate Listings Scraper: Navigating the Real Estate Market

Real estate websites often have varying layouts and structures. A custom real estate listings scraper can extract property details, prices, agent contacts, and images. This information is invaluable for both buyers and sellers, helping them make informed decisions about property investments and sales.

### News Article Scraper: Tracking News Trends and Insights

Media outlets display news in diverse formats, making a custom news article scraper a valuable tool for researchers and media analysts. This scraper can extract headlines, articles, authors, and publication dates. By tracking news trends and extracting valuable insights, researchers can stay updated on current events and media coverage.

## Conclusion: Navigating the Web Scraping Landscape

Web scraping is a powerful technique that opens doors to a world of data-driven insights. By understanding the various types of web scrapers available, you can tailor your approach to gather the information you need, whether it’s from social media, e-commerce sites, business directories, or custom websites. Remember that ethical considerations and compliance with website terms of use are crucial when scraping data. Armed with the right knowledge and tools, you can harness the